Simple representation of concept drift sources

Simple representation of concept drift sources

We achieved $9^{th}$ rank in the hackathon.

The aim of the hackathon was to higlight bias in Twitter’s Saliency model.

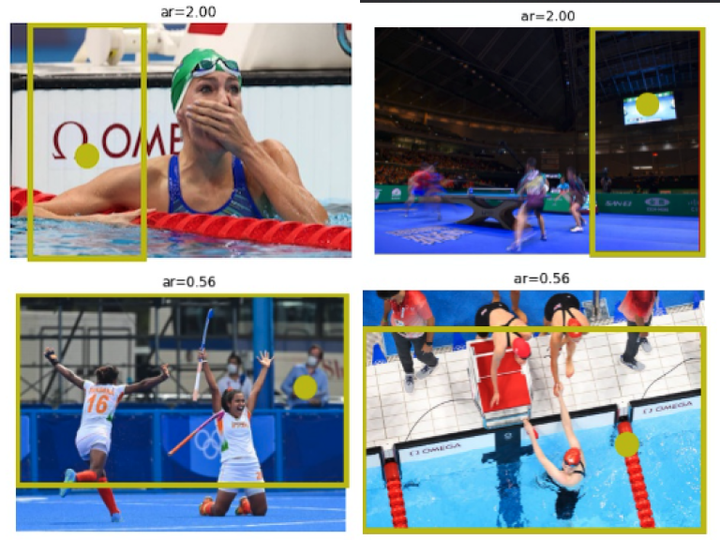

Twitter’s Saliency model is a model that is used to crop over-sized images to fit on the screen representing a thumbnail preview. The saliency model is responsible for selecting the most “salient” part of the image and that part is consequently kept in the thumbnail and the area surroinding it is cropped off.

We decided to chose, recently concluded at the time, Tokyo Olympics 2022 as a case study for highlighting bias. We downloaded around 5000 images across 10 different sports and ran the saliency model on top of them. We also passed these images through an object detection model to identify images with and without athletes.

We ran several studies, the main being classifying the images and the crops as something that was biased or not-biased. We also ran a sensitivity analysis and also tried observing changes between original coloured images and an image with a filter put on it (sepia and grayscale).

We found out that the model, in some cases, was biased towards text present in the image (which was mostly Tokyo 2020). We also found in several cases, where the main focus of the image, the athletes were not the ones selected as the most salient in the image, instead, the saliency model was predicting either someone from the audience or billboards / advertisements as the most salient. This report was submitted to Twitter and we got a thanks from the Twitter team for highlighting this bias in their model.